How to Combat AI Hallucination in Medical Writing

Oct 12, 2024

The article discusses strategies to mitigate AI hallucinations in medical writing, a critical issue where AI models generate inaccurate or fabricated content. Key solutions include using RAG-powered knowledge bases to provide accurate context, selecting large language models with strong factual performance, applying human-in-the-loop verification, and incorporating contextual prompt tuning and built-in fact-checking. AuroraPrime Create, a tool integrated with Microsoft Word 365, offers these features to ensure the reliability and accuracy of AI-assisted clinical documentation.

The medical writing industry is increasingly integrating AI to streamline documentation processes. AI brings real opportunities, but it also comes with significant challenges.

One of the challenges facing medical writers is AI hallucination: a phenomenon in which the model's output is fabricated and not grounded in either the provided context or world knowledge. This is particularly problematic in clinical documentation. Imagine an AI referencing studies, clinical trials, or research papers that don't exist. The consequences of such hallucinations can be grave, leading to misinformation and potentially harmful decisions in medical practice.

Why AI Hallucination Happens

Training Data Limitations: AI models are trained on extensive datasets available up to a specific cut-off date. When the model encounters topics with limited or inconsistent training data, it may "hallucinate" by generating content that sounds plausible but is inaccurate.

Ambiguous or Insufficient Input: Ambiguous or insufficient input undermines the AI’s ability to accurately understand and respond, often degrading output quality and leading to hallucinated content. Ensuring clear, specific, and sufficient input can greatly improve the quality and reliability of AI-generated outputs.

Complexity of Medical Language: Medical writing involves highly technical terminology, complex relationships between terms, and specific regulatory standards. If the AI does not fully capture these intricacies, the risk of hallucination increases. Moreover, unlike general knowledge, much of the knowledge needed to create clinical documents is proprietary to the sponsoring organization or even specific to a single study, so it is unlikely to be present in a model's training data.

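Model Configuration: Sampling settings such as temperature control how random a Large Language Model's (LLM's) output is. Higher temperatures produce more varied, "creative" text, but they can also make the model more likely to generate statements that the source material does not support. In many tools, these settings are fixed by default and cannot be adjusted by the end user.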
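To make the temperature effect concrete, here is a minimal Python sketch of temperature-scaled softmax, the standard way a sampling temperature reshapes a model's next-token probabilities. The logits are invented for illustration, and the function name `softmax_with_temperature` is our own, not part of any product or library.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Convert raw logits into probabilities after dividing by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical logits for three candidate next tokens
logits = [4.0, 2.0, 1.0]
for t in (0.5, 1.0, 1.5):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
```

Running it shows the top token's probability shrinking as the temperature rises, so less likely (and potentially less accurate) continuations get sampled more often. This is why a lower temperature is generally preferred when factual precision matters more than variety.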

Address AI Hallucination in Medical Writing Using AuroraPrime Create

Seamlessly integrated with Microsoft Word 365, AuroraPrime Create by AlphaLife Sciences is a Word add-in that harnesses generative AI to assist medical writers in creating clinical documentation.

RAG-powered Knowledge Base

RAG (Retrieval-Augmented Generation) is a widely used technique for improving AI outputs: relevant documents are retrieved and supplied to the model as additional context, so generation is grounded in that material rather than in the model's training data alone. AuroraPrime Create lets you build a corporate knowledge base that supports AI-driven content generation and integrates it into your clinical documentation workflow.

When generating an initial draft document from a template in AuroraPrime Create, you can upload related documents for content reuse and repurposing. In this way, you can build a RAG-powered knowledge base containing the relevant study information to streamline your documentation process.
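The retrieve-then-generate pattern can be sketched in a few lines of Python. Production RAG systems usually rank passages with embedding vectors; this sketch swaps in simple word-count cosine similarity so it runs with only the standard library. The documents, protocol name, and helper functions (`retrieve`, `build_grounded_prompt`) are hypothetical and do not reflect AuroraPrime Create's implementation.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    na = math.sqrt(sum(v * v for v in a.values()))
    nb = math.sqrt(sum(v * v for v in b.values()))
    return dot / (na * nb) if na and nb else 0.0


def retrieve(query, documents, k=2):
    """Return up to k documents most similar to the query, dropping zero scores."""
    q = Counter(tokenize(query))
    scored = [(cosine(q, Counter(tokenize(d))), d) for d in documents]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [d for score, d in scored[:k] if score > 0]


def build_grounded_prompt(question, documents, k=2):
    """Place the retrieved passages in front of the question as context."""
    context = retrieve(question, documents, k)
    lines = ["Answer using ONLY the context below. "
             "If the context does not contain the answer, say so."]
    for i, passage in enumerate(context, start=1):
        lines.append(f"[{i}] {passage}")
    lines.append(f"Question: {question}")
    return "\n".join(lines)


# Hypothetical snippets standing in for an uploaded study knowledge base
docs = [
    "Protocol ABC-101: primary endpoint is change in HbA1c at week 24.",
    "Protocol ABC-101: 312 participants were randomized 1:1.",
    "Company travel policy: book flights 14 days in advance.",
]
print(build_grounded_prompt("What is the primary endpoint of ABC-101?", docs))
```

The irrelevant travel-policy passage never reaches the prompt, and the instruction to admit when the context lacks an answer gives the model an alternative to guessing.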

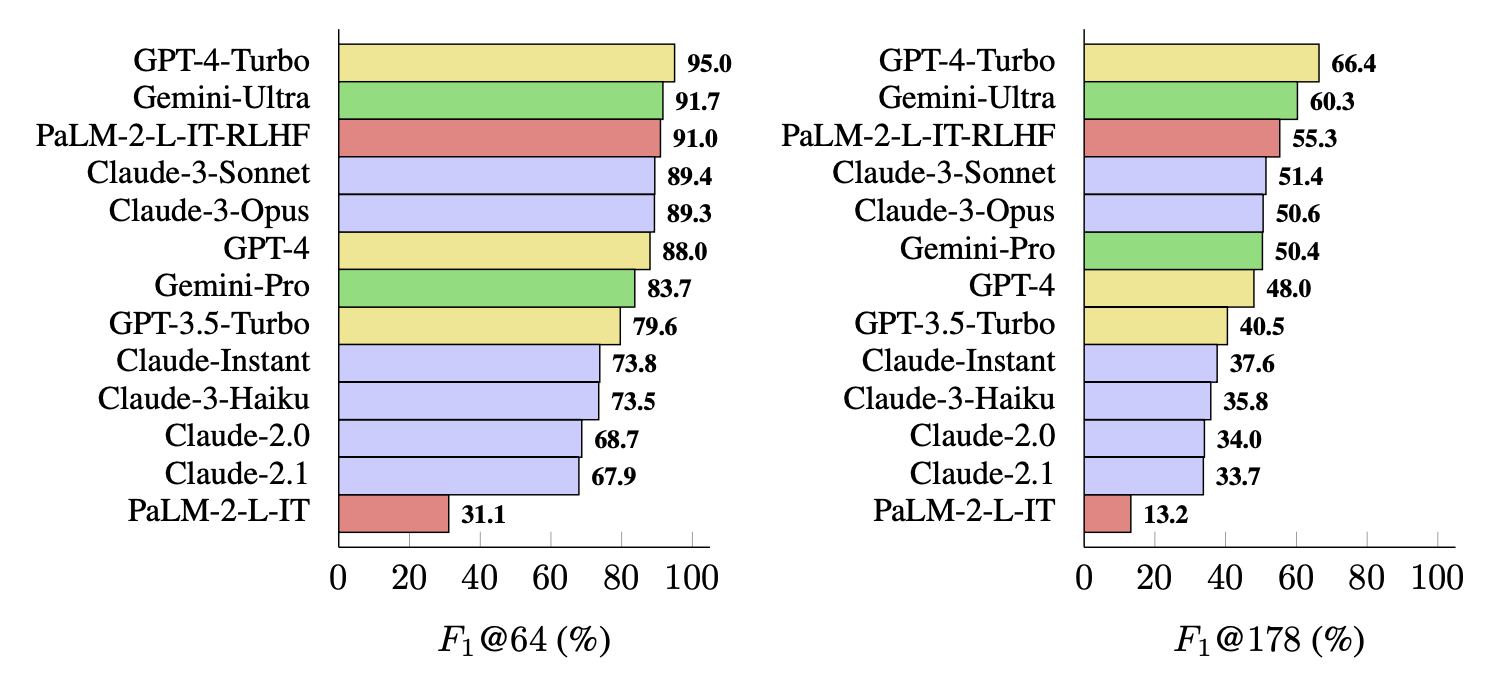

Use a Large Language Model with Higher Long-form Factuality Performance

AuroraPrime Create gives you the flexibility to select the LLM that best suits your evolving content needs, so you can choose a model with strong factual accuracy.

(Long-form factuality performance, measured for a list of mainstream models using 250 random prompts from the LongFact-Objects task of the LongFact benchmark. Image source: Wei et al. 2024)
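For readers unfamiliar with the metric in Wei et al. (2024): long-form factuality is scored with F1@K, which combines a response's precision (supported facts divided by all supported and unsupported facts) with recall measured against K, the number of supported facts a user is assumed to want. The Python function below is a minimal transcription of that formula. The fact counts and K = 64 in the example are hypothetical and are not read from the figure.

```python
def f1_at_k(supported, not_supported, k):
    """F1@K long-form factuality score, following Wei et al. (2024).

    supported:     number of facts in the response judged supported
    not_supported: number of facts judged not supported
    k:             number of supported facts at which recall saturates
    """
    if supported == 0:
        return 0.0  # the score is defined as 0 when no fact is supported
    precision = supported / (supported + not_supported)
    recall_k = min(supported / k, 1.0)
    return 2 * precision * recall_k / (precision + recall_k)


# Hypothetical response: 40 supported facts, 10 unsupported, K = 64
print(round(f1_at_k(40, 10, 64), 3))
```

Recall stops improving once a response contains K supported facts, so longer answers are not rewarded indefinitely, while every unsupported fact lowers precision.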

Human-in-the-Loop (HITL)

A Human-in-the-Loop (HITL) approach helps prevent AI hallucinations by placing human oversight at key decision-making stages, improving accuracy and reliability. AuroraPrime Create introduces human verification at specific steps of the workflow and provides fast, efficient ways to verify content, compensating for the shortcomings of AI-generated outputs.
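As a rough illustration of a HITL gate, the Python sketch below records a review status for each AI-drafted section and allows the document to be finalized only after a human has approved every section. The class and section names (`ReviewQueue`, `DraftSection`) are hypothetical and do not describe how AuroraPrime Create implements its verification steps.

```python
from dataclasses import dataclass, field
from enum import Enum


class Status(Enum):
    PENDING = "pending"
    APPROVED = "approved"
    REJECTED = "rejected"


@dataclass
class DraftSection:
    name: str
    ai_text: str
    status: Status = Status.PENDING
    reviewer_note: str = ""


@dataclass
class ReviewQueue:
    sections: list = field(default_factory=list)

    def review(self, name, approve, note=""):
        """Record a human reviewer's decision for one section."""
        for s in self.sections:
            if s.name == name:
                s.status = Status.APPROVED if approve else Status.REJECTED
                s.reviewer_note = note
                return
        raise KeyError(name)

    def can_finalize(self):
        # Release only when a human has approved every AI-drafted section.
        return all(s.status is Status.APPROVED for s in self.sections)


queue = ReviewQueue([
    DraftSection("Synopsis", "AI-drafted synopsis text"),
    DraftSection("Safety Summary", "AI-drafted safety text"),
])
queue.review("Synopsis", approve=True)
queue.review("Safety Summary", approve=False, note="AE counts need re-checking")
print(queue.can_finalize())
```

The key design choice is that the default state is "pending": AI output is never treated as final until a person has explicitly signed off on it.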

Contextual Prompt Tuning

Mainstream large language models show some ability to recognize the limits of their own knowledge, often called self-knowledge. AuroraPrime Create builds on this with built-in prompts tuned to supply the appropriate context, which helps the model stay within what it actually knows. Users can also add their own prompts when generating AI-driven content, such as summaries of tables, figures, and listings (TFL summaries). This customization aligns the AI model with the organization's unique requirements and standards, further reducing the risk of hallucination.
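One way such layered prompting could work is sketched below in Python: fixed base instructions that restrict the model to the supplied data, optionally followed by the writer's own instructions and then the table itself. The instruction text, `build_tfl_prompt`, and the sample adverse-event row are all hypothetical illustrations, not AuroraPrime Create's actual prompts.

```python
# Hypothetical built-in instructions that keep the model inside the supplied data
BASE_INSTRUCTIONS = (
    "You are assisting a medical writer with a clinical study report.\n"
    "Use only the table data provided. Do not cite studies, trials, or "
    "publications that are not in the data.\n"
    "If a value needed for the summary is missing, write 'NOT AVAILABLE' "
    "instead of estimating it."
)


def build_tfl_prompt(table_text, user_instructions=""):
    """Layer base instructions, optional writer instructions, and the table data."""
    parts = [BASE_INSTRUCTIONS]
    if user_instructions.strip():
        parts.append("Additional instructions from the writer:\n"
                     + user_instructions.strip())
    parts.append("Table data:\n" + table_text)
    parts.append("Write a concise summary of the table.")
    return "\n\n".join(parts)


table = "Treatment-emergent AEs: Drug 45/150 (30.0%), Placebo 30/148 (20.3%)"
print(build_tfl_prompt(table, "Report percentages to one decimal place."))
```

Keeping the restrictive base instructions first and fixed means a writer's custom prompt can refine the output style without removing the grounding rules.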

Built-In Fact-Checking

Always verify AI-generated medical content against reliable data sources or knowledge bases, ensuring that referenced studies or clinical trials are real and accurately summarized.
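Part of this check can be automated. Assuming trial references in a draft use ClinicalTrials.gov identifiers (the letters NCT followed by eight digits), the Python sketch below flags any cited identifier missing from a locally maintained list of verified trials. The identifiers in the example are placeholders, and `unverified_trial_ids` is a hypothetical helper.

```python
import re

# ClinicalTrials.gov identifiers: "NCT" followed by eight digits
NCT_PATTERN = re.compile(r"\bNCT\d{8}\b")


def unverified_trial_ids(text, known_ids):
    """Return NCT identifiers cited in text that are absent from known_ids."""
    cited = NCT_PATTERN.findall(text)
    return sorted(set(cited) - set(known_ids))


# Hypothetical list of verified trials kept in a local knowledge base
known = {"NCT01234567", "NCT07654321"}
draft = ("Efficacy was consistent with NCT01234567 and with "
         "NCT09999999, a phase 3 extension study.")
print(unverified_trial_ids(draft, known))
```

A flagged identifier still needs a human to decide whether it is a typo, a missing entry in the list, or a fabricated trial.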

The AuroraPrime Create add-in can automatically validate the accuracy and consistency of TFL summary objects against the TFL data, ensuring the summaries are coherent and aligned with their corresponding TFLs.
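A simplified version of this kind of consistency check is sketched below in Python: it extracts every number quoted in a summary and reports those that appear nowhere in the corresponding table cells. This is a deliberately coarse, assumed approach for illustration, not AuroraPrime Create's validation logic, and the table cells and summary text are invented.

```python
import re

NUMBER = re.compile(r"\d+(?:\.\d+)?")


def numbers_in(text):
    """Extract all numbers as floats, so '30' and '30.0' compare equal."""
    return {float(n) for n in NUMBER.findall(text)}


def unsupported_numbers(summary, tfl_cells):
    """Numbers quoted in the summary that do not appear in any TFL cell."""
    table_values = set()
    for cell in tfl_cells:
        table_values |= numbers_in(cell)
    return sorted(numbers_in(summary) - table_values)


cells = ["Drug", "45/150 (30.0%)", "Placebo", "30/148 (20.3%)"]
summary = ("TEAEs occurred in 30.0% of drug-treated and "
           "22.3% of placebo participants.")
print(unsupported_numbers(summary, cells))
```

Because it compares numbers only as a set, this check would not catch a correct value attributed to the wrong treatment arm; a fuller check would also match each value to its row and column labels.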

Wrapping Up

AI hallucination poses a significant challenge in medical writing, but tools like AuroraPrime Create offer robust solutions to mitigate these risks. By leveraging RAG-powered knowledge bases, choosing the right LLM, and incorporating rigorous fact-checking, medical writers can ensure the accuracy and reliability of their clinical documentation. As the industry continues to evolve, staying informed and utilizing advanced tools will be key to maintaining high standards in medical writing.